Java 21 Virtual Threads: A New Era of Concurrency


Java 21 has introduced Virtual Threads, a milestone feature that has the potential to revolutionize the way developers approach concurrency (and, by the way, to kill reactive programming).

Concurrency has always been hard. Writing concurrent, thread-safe and efficient code has never been an easy task, especially when it is written for situations that do not require it at all. Most business applications will never come close to the traffic handled by the largest trading or streaming platforms, yet programmers often complicate their lives with optimizations they don’t need.

Java 21’s Virtual Threads help everyone: those who need them and those who only think they do.

What are Virtual Threads?

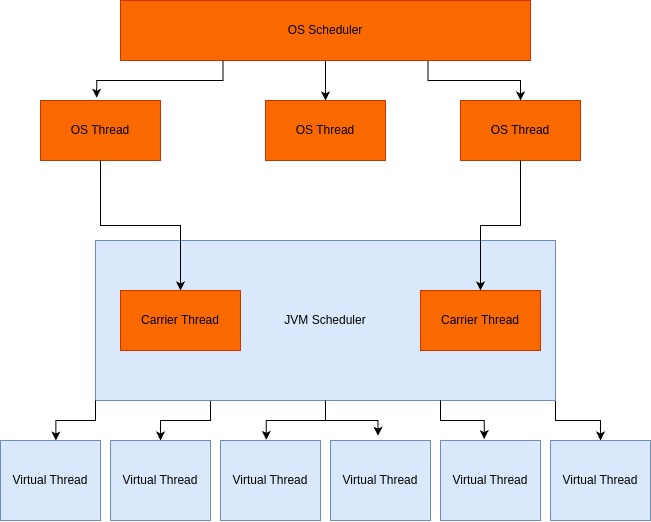

Virtual threads, also known as lightweight threads, are a new form of threading, previewed in Java 19 and 20 and finalized in Java 21 (JEP 444). Unlike traditional threads (often referred to as platform threads), which are thin wrappers around operating system threads and are scheduled by the OS, virtual threads are managed and scheduled by the Java Virtual Machine (JVM). This allows for the creation of a much larger number of threads without the significant overhead typically associated with OS-level threads.
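To make this concrete, here is a minimal sketch of the two most common ways to create virtual threads in Java 21: the Thread.ofVirtual() builder and Executors.newVirtualThreadPerTaskExecutor(). The class and method names are illustrative, and the 10,000 sleeping tasks are only a stand-in for blocking work such as network calls.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class VirtualThreadBasics {

    // Runs `count` tasks, each on its own virtual thread, and returns how many completed.
    static int runBlockingTasks(int count) {
        AtomicInteger completed = new AtomicInteger();
        // A new virtual thread per submitted task; close() at the end of the try waits for all of them.
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < count; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(Duration.ofMillis(100)); // blocking call parks only the virtual thread
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    completed.incrementAndGet();
                });
            }
        }
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        // Builder API for a single, named virtual thread
        Thread vt = Thread.ofVirtual().name("hello-virtual").start(() ->
                System.out.println("virtual? " + Thread.currentThread().isVirtual()));
        vt.join();

        System.out.println("completed tasks: " + runBlockingTasks(10_000));
    }
}
```

Each task here is an ordinary blocking call; there is no thread pool to size and no callback to register.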

Platform Threads overhead

To understand the overhead we are talking about, consider the following example. Imagine that an application thread needs to perform a request over the network in addition to its standard business logic.

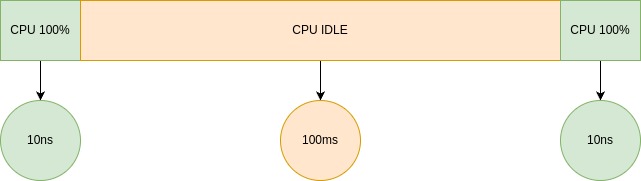

In such a case, the thread spends almost all of its time waiting for the network rather than using the CPU (the figures below illustrate orders of magnitude; they are not exact numbers).

From this, we can estimate that the CPU is busy only about 0.0001% of the time.

Accordingly, keeping the CPU 100% busy in the thread-per-request model would require nearly 1,000,000 threads.
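The arithmetic behind these two figures, using notation introduced here only for illustration: t_CPU is the time a thread spends computing, t_wait the time it spends blocked on the network, and u the resulting CPU utilization of one thread. If each thread keeps the CPU busy for a fraction u of the time, roughly 1/u such threads are needed to saturate it:

```latex
u = \frac{t_{\text{CPU}}}{t_{\text{CPU}} + t_{\text{wait}}} \approx 0.0001\,\% = 10^{-6}

N_{\text{threads}} \approx \frac{1}{u} = \frac{1}{10^{-6}} = 10^{6}
```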

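In theory, a thread created on Linux typically reserves 8 MB of virtual memory for its stack (this does not translate directly into physical memory consumption). The JVM creates its threads with a smaller default stack, on the order of 1 to 2 MB depending on the platform (configurable with -Xss).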

Multiplied by a million threads, even these reservations add up to a terabyte or more, so creating a million platform threads in the thread-per-request model is not practical with limited resources.

Asynchronous/Reactive programming to… make it even worse?

The usual workaround is asynchronous programming. Instead of processing the entire request on a single thread, each stage of the request runs on a thread borrowed from a pool, which can be reused by other tasks as soon as that stage completes. This approach reduces the number of threads needed but brings the complexity of asynchronous programming.

Asynchronous programming introduces its own concepts, requiring a learning period and potentially making your code more challenging to read and maintain. Different parts of a request might run on various threads, resulting in stack traces that lack clear context and making debugging anywhere from difficult to nearly impossible.

For example, chaining a few dependent asynchronous steps with CompletableFuture quickly turns into deeply nested callbacks:

```java
import java.util.concurrent.CompletableFuture;

public class CallbackHellCompletableFutureExample {

    public static void main(String[] args) {
        CompletableFuture.supplyAsync(() -> {
            // Simulate a long-running task
            try { Thread.sleep(1000); } catch (InterruptedException e) { e.printStackTrace(); }
            return "Task 1";
        }).thenApply(result1 -> {
            // Callback hell starts here: every step nests another async call and blocks on it
            return CompletableFuture.supplyAsync(() -> {
                try { Thread.sleep(1000); } catch (InterruptedException e) { e.printStackTrace(); }
                return result1 + " + Task 2";
            }).thenApply(result2 -> {
                return CompletableFuture.supplyAsync(() -> {
                    try { Thread.sleep(1000); } catch (InterruptedException e) { e.printStackTrace(); }
                    return result2 + " + Task 3";
                }).thenApply(result3 -> {
                    return CompletableFuture.supplyAsync(() -> {
                        try { Thread.sleep(1000); } catch (InterruptedException e) { e.printStackTrace(); }
                        return result3 + " + Task 4";
                    }).thenApply(result4 -> {
                        return CompletableFuture.supplyAsync(() -> {
                            try { Thread.sleep(1000); } catch (InterruptedException e) { e.printStackTrace(); }
                            return result4 + " + Task 5";
                        }).join(); // Blocking call
                    }).join(); // Blocking call
                }).join(); // Blocking call
            }).join(); // Blocking call
        }).join(); // Blocking call
    }
}
```

Virtual threads: a lightweight alternative

Traditional threads are tied directly to operating system threads, each consuming a significant amount of memory and resources. Virtual threads, on the other hand, are managed by the JVM and multiplexed onto a small number of OS threads, called carrier threads.

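With cheap, lightweight threads, you can adopt the “one thread per request” model without worrying about the number of threads required. When code running in a virtual thread encounters a blocking I/O operation, the runtime suspends the virtual thread and frees its carrier thread to run other virtual threads until the operation can continue, so the hardware is used almost optimally. This leads to high concurrency and, consequently, high throughput. (One caveat in Java 21: blocking inside a synchronized block pins the virtual thread to its carrier, so the carrier is not freed.)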
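As a sketch of what this buys us, here are the same five dependent steps as in the CompletableFuture example, written as plain sequential code and run on a virtual thread. The step helper is hypothetical and uses Thread.sleep as a stand-in for a blocking I/O call.

```java
import java.time.Duration;
import java.util.concurrent.Callable;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class SequentialOnVirtualThread {

    // Hypothetical stand-in for a blocking I/O call (e.g. an HTTP request).
    static String step(String previous, String task) throws InterruptedException {
        Thread.sleep(Duration.ofMillis(100)); // blocks: the virtual thread is parked, its carrier is freed
        return previous.isEmpty() ? task : previous + " + " + task;
    }

    // The five dependent steps of the CompletableFuture example, as ordinary sequential code.
    static String handleRequest() throws InterruptedException {
        String result = step("", "Task 1");
        result = step(result, "Task 2");
        result = step(result, "Task 3");
        result = step(result, "Task 4");
        return step(result, "Task 5");
    }

    public static void main(String[] args) throws Exception {
        Callable<String> request = SequentialOnVirtualThread::handleRequest;
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String> future = executor.submit(request); // one virtual thread for this request
            System.out.println(future.get()); // prints "Task 1 + Task 2 + Task 3 + Task 4 + Task 5"
        }
    }
}
```

Because the whole request runs on one thread, an exception thrown inside step carries a stack trace that includes handleRequest and its caller, which is exactly the context the asynchronous version loses.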

Due to their low cost, virtual threads don’t need to be reused or pooled. Each task is handled by its own virtual thread.
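One practical consequence: since virtual threads are not pooled, a pool size can no longer double as a limit on how many tasks hit a scarce resource at once. The JDK’s guidance for virtual threads suggests a Semaphore for that instead. A minimal sketch, assuming a hypothetical downstream service that should see at most 10 concurrent calls:

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class LimitConcurrencyWithoutPooling {

    // Hypothetical limit: at most 10 concurrent calls to the downstream service.
    private static final Semaphore PERMITS = new Semaphore(10);
    private static final AtomicInteger IN_FLIGHT = new AtomicInteger();
    private static final AtomicInteger MAX_OBSERVED = new AtomicInteger();

    static void callDownstream() throws InterruptedException {
        PERMITS.acquire(); // parks the virtual thread until a permit is free
        try {
            int now = IN_FLIGHT.incrementAndGet();
            MAX_OBSERVED.accumulateAndGet(now, Math::max);
            Thread.sleep(Duration.ofMillis(20)); // stand-in for the real blocking call
        } finally {
            IN_FLIGHT.decrementAndGet();
            PERMITS.release();
        }
    }

    // Submits `tasks` tasks, each on its own (unpooled) virtual thread; returns the peak concurrency.
    static int run(int tasks) {
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    callDownstream();
                    return null;
                });
            }
        } // close() waits for every task to finish
        return MAX_OBSERVED.get();
    }

    public static void main(String[] args) {
        System.out.println("peak concurrent calls: " + run(1_000));
    }
}
```

All 1,000 tasks get their own thread immediately; only the section guarded by the semaphore is throttled.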

Benefits of Virtual Threads

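- Performance and Scalability: Virtual threads let you scale your applications more effectively by reducing the overhead associated with thread management, so you can handle more concurrent tasks with fewer resources. Note that virtual threads are not faster threads: the gain is higher throughput, not lower latency for an individual task.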

- Simplified Code: Since virtual threads allow you to use a synchronous programming model, your code can be more straightforward and easier to understand compared to complex asynchronous models.

- Improved Resource Utilization: By decoupling the number of application-level threads from OS-level threads, virtual threads enable better resource utilization, making it possible to run many more concurrent tasks without hitting system limits.

Real-life example where Virtual Threads fit perfectly

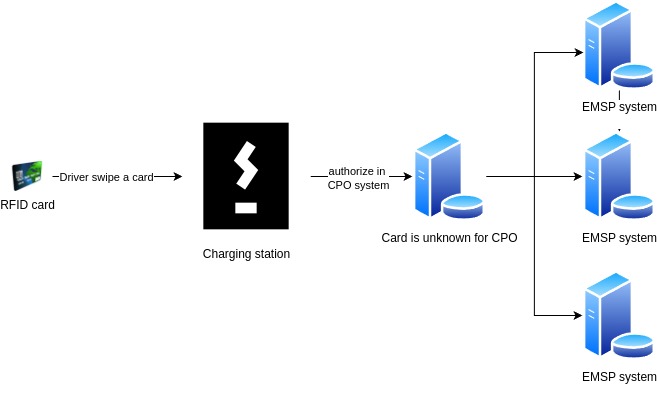

A good real-life example of where Virtual Threads pay off for parallel HTTP requests is the real-time authorization defined by OCPI.

The Open Charge Point Interface (OCPI) is a protocol that facilitates communication between electric vehicle (EV) charging infrastructure and various service providers. It is used to streamline interactions between EV drivers, charging point operators (CPOs), and e-mobility service providers (eMSPs). One important aspect of this protocol is the real-time authorization process.

Real-Time Authorization Process in OCPI

The real-time authorization process defined by the OCPI standard involves several steps to ensure that an EV driver is authorized to use a charging station. Here’s an outline of the typical process:

- Initiation by the EV driver: The process begins when an EV driver arrives at a charging station and attempts to start a charging session. This can be done with an RFID card or other authentication methods; in our example, we focus on RFID cards.

- Authorization request: The charging station sends an authorization request to the CPO’s backend system. This request includes the necessary details, such as the identifier of the driver’s RFID card.

- Forwarding to the eMSP: The CPO’s backend forwards the authorization request to the eMSP that the driver is registered with.

- Authorization decision: The eMSP processes the authorization request by checking the driver’s credentials, account status, and any applicable tariffs or restrictions. Based on this information, the eMSP decides whether to approve or deny the request.

- Response to the CPO: The eMSP sends a response back to the CPO’s backend indicating whether the authorization is approved or denied.

In practice, however, a CPO can be integrated with hundreds of eMSPs. If the CPO does not know in advance which eMSP an RFID card belongs to, it has to ask all of them. The OCPI standard describes this case:

When an eMSP does not want to Push the full list of tokens to CPOs, the CPOs will need to call the POST Authorize request to check if a Token is known by the eMSP, and if it is valid.
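Each of these calls is an ordinary HTTP request. A minimal sketch with the JDK’s java.net.http client, where the endpoint URL, token UID and credentials token are hypothetical and the path layout ({tokens_endpoint_url}/{token_uid}/authorize?type=RFID) is only modeled on the OCPI Tokens module, so check it against the OCPI version you implement:

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class OcpiAuthorizeCall {

    // HYPOTHETICAL path layout modeled on the OCPI Tokens module; verify against the spec version in use.
    // authToken is the credentials token; its required encoding depends on the OCPI version.
    static HttpRequest buildAuthorizeRequest(String tokensEndpointUrl, String tokenUid, String authToken) {
        return HttpRequest.newBuilder()
                .uri(URI.create(tokensEndpointUrl + "/" + tokenUid + "/authorize?type=RFID"))
                .timeout(Duration.ofSeconds(5)) // per-request timeout (illustrative value)
                .header("Authorization", "Token " + authToken)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    // Plain blocking call; on a virtual thread, waiting for the response does not tie up an OS thread.
    static int sendAuthorize(HttpClient client, HttpRequest request) throws Exception {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }

    public static void main(String[] args) {
        HttpRequest request = buildAuthorizeRequest(
                "https://emsp.example.com/ocpi/tokens", "012345678", "example-token");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

With one such blocking call per eMSP, fanning out to hundreds of eMSPs simply means starting hundreds of virtual threads.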

Real-Time Authorization Process in OCPI using Virtual Threads

Based on the requirements outlined above, as well as the expectations of how our system will work, we must ensure that:

- Requests will be sent to all eMSPs in parallel.

- The first positive authorization is used, and the remaining responses are ignored.

- If no eMSP authorizes the token, the responses from all eMSPs are forwarded for further analysis.

A sketch of this logic using virtual threads could look as follows:

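```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorCompletionService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

abstract class RealTimeAuthorization {

    // Placeholder types; real ones would carry the eMSP's response details.
    record RealTimeAuthorizationResponse(boolean success) {}
    record RealTimeAuthorizationResult(String status) {}

    // Overall deadline (ms) for collecting answers from all eMSPs; the value is illustrative.
    private final long timeout = 3_000;

    // One Callable per eMSP, each performing the POST Authorize call (application-specific).
    abstract List<Callable<RealTimeAuthorizationResponse>> createRealTimeAuthRequest();

    RealTimeAuthorizationResult authorize() throws InterruptedException {
        final var realTimeAuthRequests = createRealTimeAuthRequest();
        final var executorService = Executors.newVirtualThreadPerTaskExecutor();
        try {
            final var executorCompletionService = new ExecutorCompletionService<RealTimeAuthorizationResponse>(executorService);
            realTimeAuthRequests.forEach(executorCompletionService::submit); // one virtual thread per eMSP
            final var failedResponseMessages = new ArrayList<RealTimeAuthorizationResponse>(realTimeAuthRequests.size());
            final long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeout);

            for (int received = 0; received < realTimeAuthRequests.size(); received++) {
                // Wait for the next finished request, but never past the overall deadline
                final Future<RealTimeAuthorizationResponse> completed =
                        executorCompletionService.poll(deadline - System.nanoTime(), TimeUnit.NANOSECONDS);
                if (completed == null) {
                    break; // deadline reached: eMSPs that have not answered count as negative
                }
                try {
                    final var result = completed.get(); // already done, so this does not block
                    if (result.success()) {
                        return new RealTimeAuthorizationResult("authorized");
                    }
                    failedResponseMessages.add(result);
                } catch (ExecutionException e) {
                    // the request itself failed (e.g. network error): treat it as not authorized
                }
            }
            // further processing of failed messages
            return new RealTimeAuthorizationResult("unauthorized");
        } finally {
            executorService.shutdownNow(); // cancel requests that are still in flight
        }
    }
}
```

Virtual threads make it easy to fulfill the first and third requirements.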

The built-in ExecutorCompletionService class, which by default queues finished tasks in a LinkedBlockingQueue, hands results back in the order they complete, which lets us meet the second requirement.

Finally, the timeout ensures that we never wait indefinitely for a response.

The code is clean and easy to read, which becomes especially clear if you try to write the same logic with reactive programming.
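To see the timeout behavior in isolation, here is a small self-contained sketch of the same completion-order pattern with simulated eMSPs instead of real HTTP calls. The emsp helper, the delays and the deadline values are hypothetical; one simulated eMSP deliberately never answers in time.

```java
import java.time.Duration;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorCompletionService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class DeadlineDemo {

    // Simulated eMSP: answers `authorized` after `delayMillis` (hypothetical stand-in for an HTTP call).
    static Callable<Boolean> emsp(long delayMillis, boolean authorized) {
        return () -> {
            Thread.sleep(delayMillis);
            return authorized;
        };
    }

    // "authorized" on the first positive answer; otherwise "unauthorized" once all answers
    // are in or the overall deadline has passed.
    static String authorize(List<Callable<Boolean>> emsps, Duration deadline) throws InterruptedException {
        var executor = Executors.newVirtualThreadPerTaskExecutor();
        try {
            var completion = new ExecutorCompletionService<Boolean>(executor);
            emsps.forEach(completion::submit);
            long end = System.nanoTime() + deadline.toNanos();
            for (int i = 0; i < emsps.size(); i++) {
                Future<Boolean> next = completion.poll(end - System.nanoTime(), TimeUnit.NANOSECONDS);
                if (next == null) break; // deadline passed
                try {
                    if (next.get()) return "authorized";
                } catch (ExecutionException e) {
                    // a failing eMSP counts as a negative answer
                }
            }
            return "unauthorized";
        } finally {
            executor.shutdownNow(); // interrupts simulated eMSPs that are still sleeping
        }
    }

    public static void main(String[] args) throws InterruptedException {
        var hanging = emsp(60_000, true); // never answers within the deadline
        System.out.println(authorize(List.of(emsp(50, false), emsp(100, true), hanging), Duration.ofSeconds(1)));
        System.out.println(authorize(List.of(emsp(50, false), hanging), Duration.ofMillis(300)));
    }
}
```

The first call should report authorized after about 100 ms; the second should give up with unauthorized after about 300 ms instead of waiting a full minute for the unresponsive eMSP.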

Summary

Java 21 introduces Virtual Threads, a feature poised to transform concurrency handling in Java development. Historically, concurrency has been challenging, requiring complex and resource-intensive solutions. Traditional threads are managed by the operating system, leading to significant overhead, while asynchronous programming, often adopted to mitigate thread management issues, introduces complexity and maintainability challenges.

Virtual Threads, managed by the Java Virtual Machine (JVM), offer a lightweight alternative, allowing for the creation of many more threads without the usual overhead. This innovation enables a simpler and more efficient “one thread per request” model, enhancing performance, scalability, and resource utilization.

A practical example of Virtual Threads’ utility is seen in the real-time authorization process defined by the Open Charge Point Interface (OCPI) for electric vehicle charging. Virtual Threads facilitate the simultaneous handling of multiple authorization requests, simplifying code and improving efficiency compared to asynchronous programming.

In conclusion, Java 21’s Virtual Threads offer a groundbreaking approach to concurrency, simplifying code, enhancing performance, and making high-throughput applications more accessible to developers.